Trust Infrastructure for AI Agents: Architecture Insights from LFDT Belgium Meetup

The Agentic Web is emerging rapidly, with organizations rushing to build AI agents. Yet a fundamental question deserves more attention: How do we establish trust when autonomous systems make consequential decisions without human oversight?

To explore this challenge, Cyber3Lab brought together Andor Kesselman (DIF Labs) and Geoff Pirie (Inrupt), two industry leaders actively building trust infrastructure solutions. Our recent LFDT Belgium Meetup on Trusted AI Agents captured their insights on these critical challenges.

Here's what we learned.

Watch our LFDT Meetup's recording

Quick facts

AI agents execute real-world tasks while LLMs only generate text responses.

Current identity systems like OAuth cannot handle dynamic AI agent capabilities.

Moving from single agents to multi-agent networks exponentially increases attack surfaces.

Trust infrastructure must be built now before widespread deployment locks in bad security.

The agentic web will enable agents to cross organizational boundaries autonomously.

What Industry Leaders Revealed About AI Agent Trust

Large Language Models revolutionized AI but remain fundamentally constrained - trapped within training data, unable to access real-time information or take autonomous actions. This limitation sparked the emergence of AI Agents - systems that combine LLM reasoning with tools, memory, and real-world capabilities.

Yet this evolution creates a critical challenge: How do we trust systems that make consequential decisions without human oversight? Imagine your AI agent booking flights, transferring money, and sharing passport details while you sleep.

Bringing Together the Architects of Trust Infrastructure

Recognizing this emerging challenge, Cyber3Lab assembled experts actively working on trust infrastructure solutions for our LFDT Belgium Meetup on Trusted AI Agents.

Andor Kesselman chairs the Technical Steering Committee at DIF and leads multiple foundational working groups focused on trusted AI agents, decentralized web nodes, and trust registries.

As founder of AgentOverlay and leader of MIT's Project NANDA Bay Area chapter, he's building core infrastructure for the agentic web.

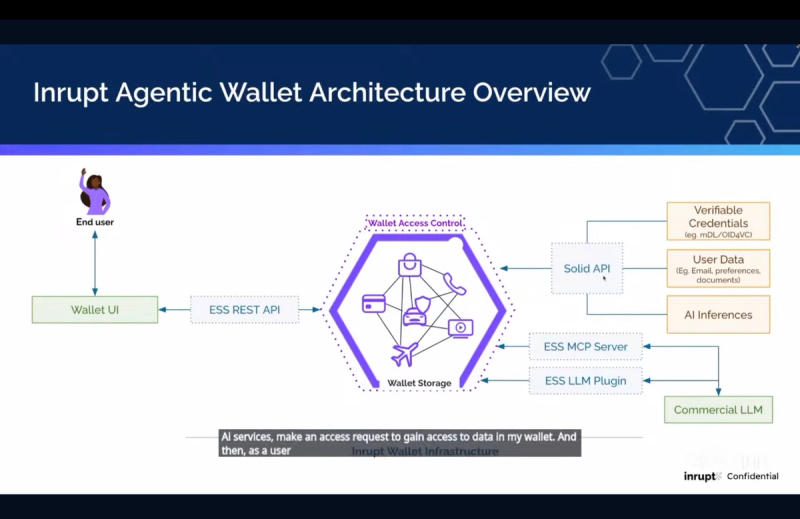

Geoff Pirie, Director of Product at Inrupt, leads development of Agentic Wallets™ using the Solid protocol and Model Context Protocol integration.

Their insights revealed why trust isn't optional for AI agents. It's foundational infrastructure that will determine whether the agentic web becomes empowering or dangerous.

Building Individual Agent Trust: Agentic Wallets in Practice

The first trust challenge users face is deceptively simple: how do you give your personal AI agent access to exactly the data it needs without compromising your privacy? Traditional systems force an all-or-nothing approach: either grant broad access to your information or limit the agent to generic responses that provide little value.

This creates a fundamental dilemma. Users want personalized AI experiences that understand their preferences, habits, and context. But they also want granular control over what data gets shared, when it's accessed, and how it's used. Current platforms typically require users to surrender comprehensive data access upfront, creating privacy risks and reducing user agency.

Geoff gave a live demonstration of a working solution that addresses this core challenge. His demo showed how users can keep granular control over their data while still enabling AI personalization, through integration of the Solid protocol and the Model Context Protocol (MCP). Users approve access to specific data containers while maintaining cryptographic access controls.
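To make the idea of container-level approval concrete, here is a minimal sketch in Python. It is not the Solid or Inrupt API: `DataPod`, `approve`, and `read` are hypothetical names, and a plain in-memory check stands in for the cryptographic access controls a real deployment would use. The point is only to show an agent being approved for one container without gaining access to the others.

```python
from dataclasses import dataclass, field


@dataclass
class DataPod:
    """Hypothetical stand-in for a user's personal data store."""
    containers: dict[str, dict]  # container name -> stored data
    grants: dict[str, set[str]] = field(default_factory=dict)  # agent id -> approved containers

    def approve(self, agent_id: str, container: str) -> None:
        """Record that the user approved one agent for one specific container."""
        if container not in self.containers:
            raise KeyError(f"unknown container: {container}")
        self.grants.setdefault(agent_id, set()).add(container)

    def read(self, agent_id: str, container: str) -> dict:
        """Return container data only if the user approved this exact pairing."""
        if container not in self.grants.get(agent_id, set()):
            raise PermissionError(f"{agent_id} has no grant for {container}")
        return self.containers[container]


# Illustrative data only: the agent is approved for preferences, not the passport.
pod = DataPod(containers={
    "travel-preferences": {"seat": "aisle"},
    "passport": {"number": "REDACTED"},
})
pod.approve("travel-agent", "travel-preferences")
```

With this setup, `pod.read("travel-agent", "travel-preferences")` returns the preferences, while `pod.read("travel-agent", "passport")` raises `PermissionError`, because access is denied by default and granted per container.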

When Agents Become Networks

The trust challenge changes dramatically when moving from single agents to multi-agent systems. MIT's Project NANDA envisions an 'agentic web' where agents cross organizational boundaries much as people move between different companies. Complexity then compounds: each additional agent introduces new connection points, delegation chains, and failure modes that are absent in single-agent systems.
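One rough way to see how quickly these connection points accumulate is to count them. The sketch below is an illustration rather than a model presented at the meetup: it assumes any agent may talk to any other, and that a delegation chain is an ordered sequence of distinct agents. The function names are hypothetical.

```python
from math import comb, perm


def pairwise_links(n: int) -> int:
    """Number of distinct agent pairs that could communicate directly."""
    return comb(n, 2)


def delegation_chains(n: int, max_len: int) -> int:
    """Number of ordered chains made of 2..max_len distinct agents."""
    return sum(perm(n, k) for k in range(2, max_len + 1))
```

Under these assumptions, 10 agents already give 45 possible direct links and 810 possible delegation chains of up to three agents. Direct links grow quadratically with the number of agents, and the number of possible chains grows much faster as the allowed chain length increases, which is where most of the auditing burden comes from.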

Identity becomes the fundamental building block for this network vision, enabling agent-to-agent messaging, discovery, interoperability, and state management. Agent identities differ radically from human ones - they feature dynamic capabilities that change during runtime, require complex lifecycle management, and operate at massive scale. Current systems like SPIFFE and WIMSE (designed for microservices) don't handle the dynamic, information-rich nature of AI agents.

This creates "Know Your Agent" (KYA) challenges. Traditional systems like OAuth lack the complex delegation and context awareness that agents require.

Security Challenges at Scale

The agentic web introduces security challenges that go beyond traditional AI risks. Andor highlighted the Multi-Agent System Failure Taxonomy from Berkeley researchers, which groups the ways multi-agent systems fail: specification issues create system exploits, inter-agent misalignment enables adversarial coordination, and task verification failures allow malicious agents to operate undetected.

Advanced attack vectors compound these risks: Sybil attacks in software supply chains, unauditable delegation chains, and the challenge of proving delegation originates from humans rather than compromised agents. Solutions include Trusted Execution Environments and cryptographic personhood credentials, but the attack surface expands exponentially as agent networks scale.
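The delegation problem can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a protocol described by the speakers: the `KEYS` registry, the `HUMANS` set, and the `Link` structure are hypothetical, and HMAC with shared secrets stands in for real public-key signatures only to keep the example dependency-free. The verifier checks three things: the chain starts with a known human, every link is signed by its delegator, and no link widens the scopes it received.

```python
import hashlib
import hmac
from dataclasses import dataclass

# Hypothetical key registry; a real system would use public-key credentials.
KEYS = {"alice": b"alice-secret", "agent-a": b"a-secret", "agent-b": b"b-secret"}
HUMANS = {"alice"}  # principals accepted as human roots of a delegation


@dataclass(frozen=True)
class Link:
    delegator: str
    delegate: str
    scopes: frozenset
    signature: str


def _mac(delegator: str, delegate: str, scopes) -> str:
    """MAC over the link contents, keyed by the delegator's secret."""
    payload = "|".join([delegator, delegate, ",".join(sorted(scopes))]).encode()
    return hmac.new(KEYS[delegator], payload, hashlib.sha256).hexdigest()


def sign_link(delegator: str, delegate: str, scopes: set) -> Link:
    return Link(delegator, delegate, frozenset(scopes), _mac(delegator, delegate, scopes))


def verify_chain(chain: list) -> bool:
    """Accept only chains rooted in a human, correctly signed, and never widened."""
    if not chain or chain[0].delegator not in HUMANS:
        return False
    prev = None
    for link in chain:
        if link.delegator not in KEYS:
            return False
        expected = _mac(link.delegator, link.delegate, link.scopes)
        if not hmac.compare_digest(expected, link.signature):
            return False  # link was forged or altered after signing
        if prev is not None:
            if link.delegator != prev.delegate:
                return False  # chain is not contiguous
            if not link.scopes <= prev.scopes:
                return False  # delegate tried to pass on more than it received
        prev = link
    return True
```

For example, a chain where `alice` delegates `{"book", "pay"}` to `agent-a`, which then delegates `{"book"}` to `agent-b`, verifies. A chain that begins at `agent-a`, or one where `agent-a` passes on a scope it never held, does not.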

Building Trust Infrastructure

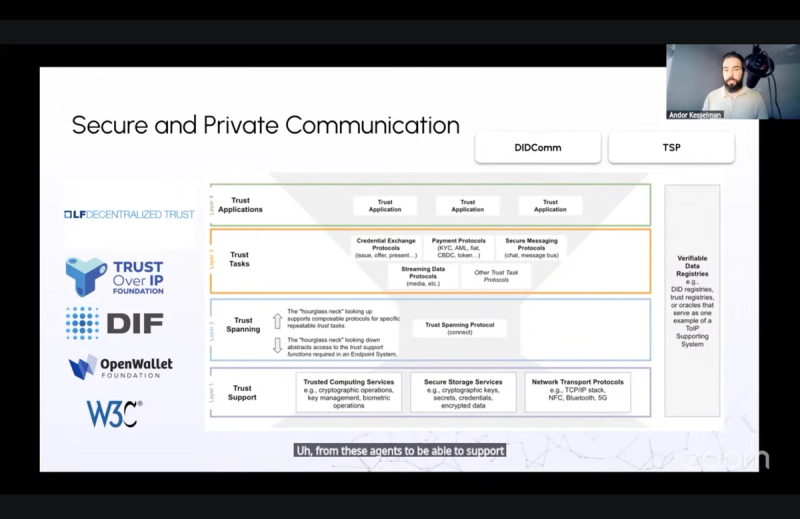

Trustworthy agents require several coordinated technical elements that our speakers are actively developing. Communication forms the foundation - agents need protocols that preserve trust across organizational boundaries.

While MCP and Agent-to-Agent (A2A) messaging handle direct interactions, Trust Over IP's Trust Spanning Protocol (TSP) provides the broader solution: universal interworking for AI interactions that enables different identity systems to interoperate while maintaining trust guarantees.

Beyond communication, agents need sophisticated access control - systems that are dynamic, chainable, and revocable across jurisdictions. They also require discovery mechanisms like NANDA Index that can handle both static registries and dynamic capabilities, plus policy frameworks capable of managing both deterministic rules and the non-deterministic behavior inherent in AI systems.
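What "chainable and revocable" could mean in practice is sketched below. This is a hypothetical in-memory model, not the NANDA Index or any protocol named above; `Grant` and `GrantRegistry` are illustrative names, and jurisdictional rules are left out entirely. Each grant may point to a parent grant, and a grant counts as active only while it and every ancestor are unexpired and unrevoked, so revoking one link cuts off everything delegated from it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Grant:
    grant_id: str
    holder: str
    scope: str
    expires_at: float  # timestamp on whatever clock the caller uses
    parent_id: Optional[str] = None  # grant this one was delegated from
    revoked: bool = False


class GrantRegistry:
    """Hypothetical registry tracking grants and their delegation parents."""

    def __init__(self) -> None:
        self._grants: dict[str, Grant] = {}

    def issue(self, grant: Grant) -> None:
        if grant.grant_id in self._grants:
            raise ValueError(f"duplicate grant id: {grant.grant_id}")
        if grant.parent_id is not None and grant.parent_id not in self._grants:
            raise KeyError(f"unknown parent grant: {grant.parent_id}")
        self._grants[grant.grant_id] = grant

    def revoke(self, grant_id: str) -> None:
        self._grants[grant_id].revoked = True

    def is_active(self, grant_id: str, now: float) -> bool:
        """A grant is active only if it and all of its ancestors are."""
        current = self._grants.get(grant_id)
        while current is not None:
            if current.revoked or now >= current.expires_at:
                return False
            if current.parent_id is None:
                return True
            current = self._grants.get(current.parent_id)
        return False  # unknown grant id
```

Checking validity at use time, rather than copying permissions at delegation time, is one design choice that makes revocation take effect immediately for every downstream agent.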

The current landscape shows multiple competing protocols, but practical adoption pressures will likely drive convergence toward one or two dominant approaches for interoperability - similar to how the early internet's various networking protocols eventually consolidated around TCP/IP.

Key Insights from Trust Architects

Bringing these two complementary experts together surfaced critical perspectives from people who are actually building the solutions:

The SSI Breakthrough Moment: Andor suggested this could be the "killer use case" for Self-Sovereign Identity, finally making decentralized identity truly relevant by forcing better authentication and delegation tools that traditional systems simply cannot provide.

Working Solutions Today: Geoff demonstrated that granular data control for AI agents isn't theoretical. Inrupt has working implementations that solve consent management and privacy preservation while enabling personalized AI experiences.

Protocol Convergence: While multiple competing protocols may emerge initially, practical adoption will likely drive convergence toward one or two dominant approaches, similar to past technology format wars.

Mainstream Transformation: Though currently specialized, these trust infrastructure needs will rapidly become mainstream requirements as AI agents gain autonomy and widespread adoption.

The Urgency of Trust Infrastructure

The timing of these challenges isn't coincidental. As theoretical trust problems become practical infrastructure needs, our LFDT meetup captured insights from experts building both data control and identity coordination solutions.

The window for establishing the right foundational infrastructure is now, before widespread deployment creates irreversible dependencies on inadequate trust models.

Andor's slide deck is available through his LinkedIn post.

Cyber3Lab's Research Ambitions

The insights from our LFDT meetup confirmed that AI agent trust infrastructure sits at the intersection of our existing capabilities in Self-Sovereign Identity, Verifiable Credentials, and decentralized systems. As these challenges move from theoretical to practical, we're positioned to contribute meaningfully alongside the infrastructure architects we assembled.

Ready to explore the future of AI agent trust infrastructure? Connect with Cyber3Lab to discuss collaboration opportunities as we help shape these foundational technologies.

Contributors

Authors

Shane Deconinck, Web3 Lead

Last updated on: 9/30/2025
